When typing, your fingers are like a superscalar processor

Sometimes bad analogies are the most interesting ones because they make you really think about the two things you’re comparing. So here’s one such analogy that made me think more about CPU microarchitecture.

January 6, 2019

Imagine that our fingers collectively act as a processor, and this processor executes instructions of the form type x where x is a character on the keyboard. So, to type the phrase hello world, our instruction stream would look like:

type h

type e

type l

type l

type o

type

type w

type o

type r

type l

type d

What characteristics does this processor have, and how does this processor relate to the ones found in our computers?

First, note that even though the given letters have to be typed in sequence (otherwise we’d end up with a typo), most typists will position their fingers in preparation to type letters further down the sequence. For instance, while my left hand is pressing the ‘E’ key when I type hello world, I’m positioning one of the fingers of my right hand to rest on top of the ‘L’ key. In essense our fingers are working in parallel even though the instruction stream must follow a serial order.

In processor terminology, this “parallelism within serial code” is known as instruction-level parallelism (ILP), and leveraging it is key to achieving good single-threaded performance in processors. Two of the most common techniques processors use to take advantage of ILP are pipelining and superscalar execution. Let’s see how well these terms describe what our fingers are doing.

Pipelining

Executing an instruction almost always takes several steps. In a classic 5-stage CPU pipeline, each instruction can be broken down into five steps:

- Get the instruction (instruction fetch or IF)

- Decode it (instruction decode, ID)

- Perform the instruction (execute, EX)

- Perform a memory acccess if needed (MEM)

- Write any needed results to a register (write-back, WB)

Each step of the pipeline is done on specialized hardware; that is, within a CPU, part of the silicon is dedicated to IF, part of it is dedicated to ID, and so on, and no part of silicon can do any other step of the instruction. If all steps are executed serially, most of the silicon spends its time idle:

| Time → | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|---|---|---|---|---|---|---|---|---|---|

| Instruction 1 | IF | ID | EX | MEM | WB | |||||

| Instruction 2 | IF | ID | EX | MEM | WB |

The IF is only working in two units of time out of ten–20% utilization. Same for all other steps. The idea behind pipelining is that instead of leaving this piece of silicon idle, we let it get started on the next instruction:

| Time → | 0 | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|

| Instruction 1 | IF | ID | EX | MEM | WB | |

| Instruction 2 | IF | ID | EX | MEM | WB |

Are we typing in a pipelined manner? I don’t think so. I’d break down our instructions in the following manner:

- Instruction fetch/decode. I don’t really even consider this a step because it’s done by our brain so quickly that it’s really never a bottleneck or concern.

- Move finger to desired key

- Press key

- Hold key briefly

- Release key

This isn’t pipelinable because steps 2-5 are performed by the same finger. It’s not like one part of our finger is idle while the rest of that same finger is pressing a key; we instead have other fingers idle while one finger is working. Even though our other fingers can work on future letters/instructions when one finger is typing, this isn’t pipelining either because each finger is general-purpose; each finger moves, presses, holds, and releases a key and isn’t specialized for one step of the instruction.

Superscalar execution

Superscalar execution is like using a multicore processor to execute a single, serial stream of instructions. Each “core” is known as an execution unit. Below is two instructions executing on a processor with two execution units:

| Time → | 0 | 1 | 2 | 3 | 4 |

|---|---|---|---|---|---|

| Instruction 1 | IF | ID | EX | MEM | WB |

| Instruction 2 | IF | ID | EX | MEM | WB |

Of course, one can’t blindly parallelize things and expect them to keep working. In multithreaded programming, it’s the programmer’s responsibility to ensure everything works correctly through the use of locks, atomic operations, and so on. With superscalar execution, these worries are handled by the hardware–the processor detects instruction dependencies and schedules instructions (adding delays if necessary) such that all dependencies are done in time.

Our fingers are kind of like a superscalar processor. Each finger is like an execution unit because each finger is capable of executing a complete instruction–they are not completing some work for an instruction and then passing it onto another finger for further processing, which would be pipelining. So in some ways we process typing commands similarly to how a processor with 10 execution units would.

We’re not like any normal superscalar processor

Although “superscalar” may be a better descriptor than “pipelined” for our processor, it certainly isn’t a perfect fit. A typical execution unit has a certain subset of instructions it’s capable of executing, and can execute an instruction within that subset as long as all dependencies to that instruction are satisfied. This isn’t really true with our fingers, though. Suppose we’re currently using none of our fingers execept our left ring finger, which is in the process of typing S. If we issue an instruction to type P to our left pinky, we’d have to wait for S to finish typing. Perhaps you have a different keyboard layout or weird hands, but I at least know I would; my left pinky can’t reach P while my left ring finger is on S.

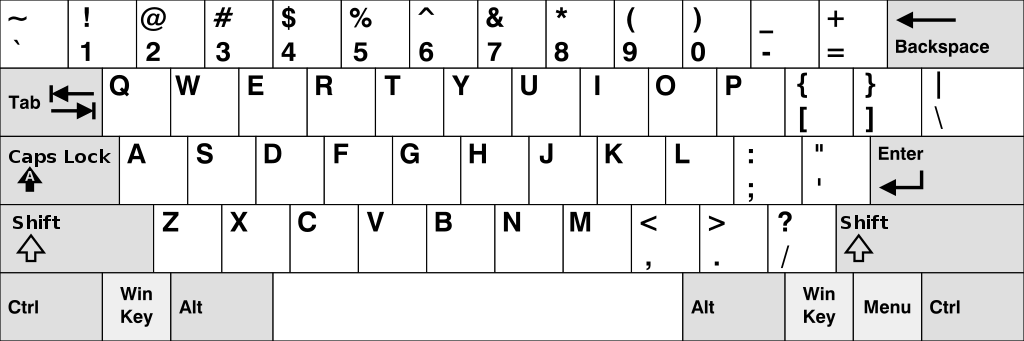

US QWERTY keyboard for reference; source

This doesn’t really make happen in a standard superscalar processor. My left pinky can clearly press the P key, so it’s capable of executing the instruction. It’s also currently unused–for example, it could execute type A without any long delay. The long delay we’re forced paying when issuing type P to our left pinky can’t really be explained away by an instruction dependency either–we’d have practically no delay if type P were issued to our right ring finger instead. We basically have a capable and available execution unit that’s blocked by another execution unit.

Execution units in processors are pretty independent, while our fingers are intertwined do to physical constraints.

Does any processor term fit better?

There are a some other terms from processor design that sort of apply to our situation, but none of them fit any better: out-of-order, VLIW, … At a high level, this is because people come up with terminology to succinctly describe techniques they’re frequently using, and the way our fingers exploit ILP isn’t a useful technique in processor design, so no phrase exists to describe it accurately.

Unlike with our fingers, there aren’t any really inherent constraints that force processor execution units to be coupled. And choosing to couple execution units leads to bad results all around:

- it’s bad for hardware designers because the processor’s instruction scheduler has to consider additional constraints, further complicating it

- it’s bad for software developers because those that want to squeeze the most performance out of a processor and maximize execution unit utilization now need to consider a lot more constraints

So unsurprisingly, this “tightly coupled superscalar” idea isn’t really implemented anywhere. The closest thing that comes to mind that exists in real-world processors is coupling between cores in multicore processors. AMD’s Bulldozer architecture used pairs of cores that shared a floating-point unit, and AMD’s current Zen/EPYC/Threadripper processors are composed of quadruplets of cores called CCXes, with each CCX sharing an L3 cache.

What’s the point?

In life, things happen in continuous time, so there isn’t really a clock rate, which is why I think this analogy is so interesting. Traditional definitions of pipelining and superscalar execution assume a discretization of time and don’t really make sense in the absence of one. This analogy suggests an alternative definition that doesn’t require a clock rate. For a processor that is working on multiple instructions at once, are the workers:

- Dependent on each other, doing some work and passing the instruction to another worker? If so, this is pipelining, and each worker is a pipeline stage.

- Indepedent of each other? If so, this is superscalar execution, and each worker is an execution unit.

(Many processors are both pipelined and superscalar in which case our workers are dependent on the other ones within the execution unit, but independent of workers from outside the execution unit)